Amid the global pandemic, the value of Big Data development has increased. Leaders must make rapid decisions about controlling costs and maintaining liquidity, and Big Data analytics helps them reduce expenditures, mitigate risks, and increase operational efficiency.

According to Forbes statistics on Big Data development, 79% of executives agree that companies will perish unless they embrace Big Data.

How can you make Big Data development work for you? What are the risks, and how can you mitigate them? In this post, we answer these and many other questions. As a data-driven company that has helped enterprises and Fortune 500 companies launch and scale their Data Analytics directions, we’ll dive deeper into the trends, challenges, best practices, and success stories of Big Data development.

Trends of Big Data development:

1. Real-time analytics

Businesses are increasingly adopting real-time analytics because it lets them react instantly to a specific event. For example, it is used in fraud analytics to flag suspicious behaviour (e.g., withdrawing $10,000 from a credit card). It can also drive recommendations and bundle offers to boost sales, and it is widely used to improve user experience.
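As a minimal sketch of the fraud-flagging idea, the Python snippet below scans a stream of card events and flags large withdrawals. The `CardEvent` fields, the `flag_suspicious` function, and the fixed $10,000 threshold are assumptions made for illustration; a production system would typically read events from a streaming platform and apply far richer rules or models.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class CardEvent:
    """A hypothetical card transaction event."""
    card_id: str
    kind: str       # e.g. "withdrawal" or "purchase"
    amount: float   # amount in USD


def flag_suspicious(events: Iterable[CardEvent],
                    threshold: float = 10_000.0) -> Iterator[CardEvent]:
    """Yield withdrawals whose amount is at or above the threshold.

    Events are handled one at a time, so the function also works on an
    unbounded stream (any iterable), not only on a finished batch.
    """
    for event in events:
        if event.kind == "withdrawal" and event.amount >= threshold:
            yield event


stream = [
    CardEvent("card-1", "purchase", 42.50),
    CardEvent("card-2", "withdrawal", 10_000.00),
    CardEvent("card-3", "withdrawal", 200.00),
]
flagged = list(flag_suspicious(stream))
```

Because `flag_suspicious` is a generator, each event can be acted on as soon as it arrives, which is the property that distinguishes real-time processing from batch reporting.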

For example, thanks to real-time analytics, our client Gogo, a global in-flight internet provider, was able to monitor the signal quality of its satellite antennas in real time, switch off antennas with a bad signal, and switch on antennas with a better one, thus providing an uninterrupted user experience.

What you need for quality real-time analytics:

- Big Data developers who are proficient with Kafka and Kinesis.

- Quality data, good data sources, and a clear understanding of the business processes and of how changes in the data can affect operational processes.

- A data warehouse or a data lake where the real-time data is collected, stored, and processed so that it can be used later for predictions, market segmentation, and longer-term strategic decisions. You will also need a proper data lake for retraining your Data Science/Machine Learning models.

2. Data management automation

To improve the quality of data warehouse management and enhance ETL (Extract, Transform, Load) solutions, many businesses apply AI/Machine Learning models that remove duplicate records or fill in missing data, thus making the final results more accurate.
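The two clean-up steps named above, removing duplicates and filling in missing values, can be sketched with simple rules. This is a rule-based stand-in, not an ML model: the record layout, the `deduplicate` and `fill_missing` helpers, and the choice of the median as the fill value are all assumptions for illustration.

```python
from statistics import median
from typing import List


def deduplicate(records: List[dict], key: str) -> List[dict]:
    """Keep the first record seen for each value of `key`."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


def fill_missing(records: List[dict], field: str) -> List[dict]:
    """Replace None in `field` with the median of the known values."""
    known = [r[field] for r in records if r[field] is not None]
    if not known:
        return [dict(r) for r in records]  # nothing to impute from
    fill_value = median(known)
    return [
        {**r, field: fill_value if r[field] is None else r[field]}
        for r in records
    ]


raw = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 1, "amount": 10.0},   # duplicate of order 1
    {"order_id": 3, "amount": 30.0},
]
clean = fill_missing(deduplicate(raw, key="order_id"), field="amount")
```

A learned model would replace the median with a prediction based on the other columns, but the pipeline shape (deduplicate first, then impute) stays the same.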

3. Data security/governance

To ensure data security, our specialists recommend following these best practices of Big Data development:

- Implement static code analyzers.

- Implement vulnerability scans for third-party libraries.

- Check whether traffic is encrypted and whether sensitive data is cached.

We help companies implement these security mechanisms for data stored both in the cloud and on-premises.

4. Multi-cloud, hybrid cloud, and cloud-agnostic for Big Data development

Multi-cloud and cloud-agnostic approaches allow companies to enjoy the scalability of the cloud without becoming too dependent on one cloud vendor. This lets you choose the most efficient cloud offerings and optimize the costs of your Big Data development project in the long run.

For example, for one of our clients, a Fortune 500 company, we use Snowflake for Big Data development as it is a cloud-agnostic, future-proof solution, and it allows our client to choose the cloud vendor with the best offering.

Though many companies are investing or planning to invest in Big Data development as a strategic direction, you need solid expertise to get it right and overcome the major bottlenecks.

Challenges of Big Data development

According to a McKinsey survey that plotted performance figures for 80 companies that had adopted Big Data, a few saw a sizable impact on profits, exceeding 10 percent. Many had incremental profits of 0 to 5 percent, and a few experienced negative returns. In the same survey, those with negative results named three key reasons: poor quality of data, a lack of specialists, and an inability to scale up their Big Data activities. Let’s look closer at these challenges and ways to solve them.

Big Data development challenge # 1. Poor quality of data

Clean, valid, complete data is the foundation of Big Data development as any discrepancies in the dataset may cause misleading results.

The solution

To ensure good quality of data, our specialists recommend following these best practices of Big Data development:

- Choose the right data sources and have the data continuously checked.

- Pay special attention to data preparation and cleaning.

- Implement automated checks for all incremental pipelines.

- Work on data governance and master data management solutions to improve the quality of the data.
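Automated checks for an incremental pipeline can start very small. The sketch below validates one incoming batch before it is loaded; the `check_batch` function, the check names, and the 10% null tolerance are hypothetical choices for illustration, not a prescribed standard.

```python
from typing import Dict, List


def check_batch(rows: List[dict], required: List[str], key: str,
                max_null_ratio: float = 0.1) -> Dict[str, bool]:
    """Run basic quality checks on one incremental batch.

    Returns a mapping from check name to True (passed) or False (failed).
    """
    results = {"non_empty": len(rows) > 0}

    # Every row must contain every required column.
    results["schema"] = all(all(col in row for col in required) for row in rows)

    # The key column must be unique within the batch.
    keys = [row.get(key) for row in rows]
    results["unique_key"] = len(keys) == len(set(keys))

    # Each required column may hold at most `max_null_ratio` missing values.
    for col in required:
        nulls = sum(1 for row in rows if row.get(col) is None)
        ratio = nulls / len(rows) if rows else 0.0
        results[f"nulls:{col}"] = ratio <= max_null_ratio
    return results


batch = [
    {"id": 1, "sku": "A-100", "qty": 5},
    {"id": 2, "sku": None, "qty": 3},
    {"id": 2, "sku": "C-300", "qty": 7},   # repeated id
]
report = check_batch(batch, required=["id", "sku", "qty"], key="id")
failed = [name for name, ok in report.items() if not ok]
```

A pipeline would typically refuse to load the batch, or route it to quarantine, whenever `failed` is not empty.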

Big Data development challenge # 2. Scaling Big Data activities

When implementing a Big Data solution, it is critical that the solution and its architecture are suitable for scaling in the cloud.

The solutions

- Our Big Data specialists are often contacted by clients whose on-premise Big Data solutions are not suitable for scaling and who need to migrate them to AWS, GCP, or Azure. For example, we’ve helped an in-flight telecom provider migrate from their on-premise Big Data solutions based on Cloudera to the AWS cloud. Our experts have also helped a global supply chain company migrate from an on-premise solution based on Hortonworks to the cloud.

- The Big Data solution may need refactoring to microservices. For instance, one of our clients, a global manufacturing company, built a platform for warehouse staff to efficiently allocate and manage goods and materials. However, after several months of use in a few warehouses, the platform turned out to have many flaws and was unsuitable for further scaling. The core reason the platform was inefficient and hard to scale was its monolithic architecture. Therefore, our Solution Architect designed and presented a new cloud-native infrastructure for the platform based on Azure Kubernetes, along with the suggested tech stack and the most efficient roadmap. Refactoring to microservices allows scaling up and smoothly adding new SaaS services: anomaly detection, delivery prediction, route recommendations, object detection in logistics, OCR (optical character recognition) of labels on boxes, Natural Language Processing for document verification, data mining, and sensor data processing.

Big Data development challenge # 3. Lack of specialists

Though there are many Big Data analytics tools and platforms for different purposes, they all need some level of customization and integration into an enterprise ecosystem. What’s more, you need to have the data appropriately structured and cleaned up. Only then can you extract real business insights from it. That’s why the demand for Big Data engineers is soaring. For example, Germany has over 30,000 professional data scientists and Big Data engineers, but the number of tech companies competing for these experts is enormous.

The solution

Therefore, many enterprises and large tech companies look for a provider in Eastern Europe, and they do it for a good reason. The number of Data Scientists and Big Data engineers here amounts to more than 150,000, according to LinkedIn, with Poland and Ukraine taking the lead.

How to choose the right experts for outsourcing Big Data development:

- Choose a location with a vast talent pool.

- Settle on a Big Data development vendor with solid expertise in Big Data engineering, Data Science, ML, BI, Cloud, DevOps, and Security.

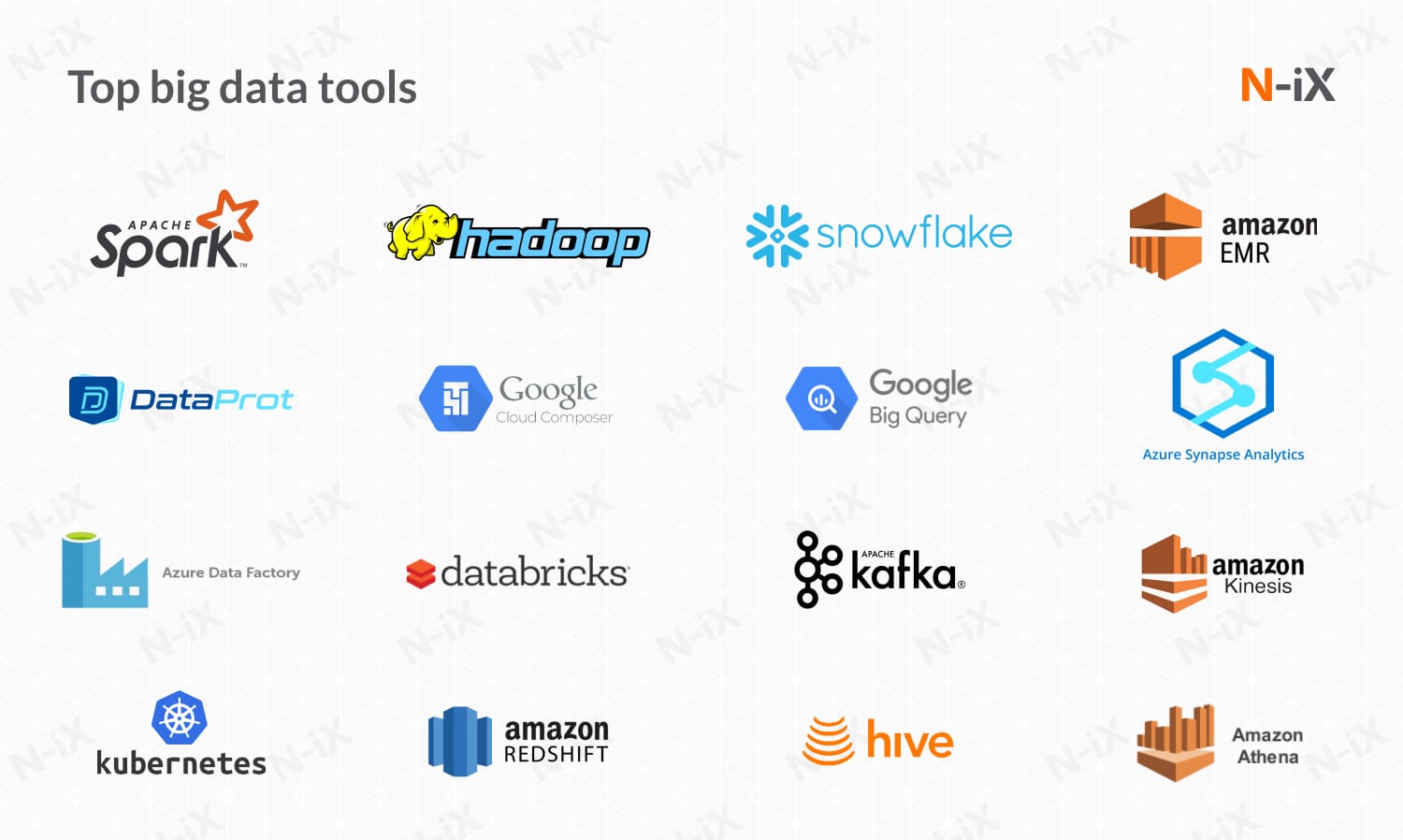

- Hire Big Data developers with expertise in:

  - Hadoop ecosystem and Apache Spark. They allow storing and processing large volumes of data by distributing the computation across several nodes.

  - Such cloud-based tools as Snowflake, EMR, Dataproc, Cloud Composer, BigQuery, Synapse Analytics, Data Factory, Databricks.

  - SQL/NoSQL databases.

  - Such Big Data tools as Redshift, Hive, Athena for querying data.

  - Maintaining old MapReduce Java code and rewriting it using the more recent Spark technology.

  - Scala, Python, and Java.

  - Kubernetes constructs that are used to build Big Data CI/CD pipelines.

  - Kafka, AWS Kinesis or Apache Pulsar for real-time Big Data streaming.
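The idea behind Hadoop MapReduce and Spark mentioned in the list above (split the data into partitions, process each partition independently, then merge the partial results) can be illustrated without a cluster. The sketch below runs in a single process and uses hypothetical function names; on a real cluster each partition would be handled by a different node.

```python
from collections import Counter
from functools import reduce
from typing import List


def partition(items: List[str], n: int) -> List[List[str]]:
    """Split items into n roughly equal partitions (round-robin)."""
    return [items[i::n] for i in range(n)]


def map_partition(lines: List[str]) -> Counter:
    """Map step: count words within one partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def merge(left: Counter, right: Counter) -> Counter:
    """Reduce step: combine two partial counts."""
    return left + right


lines = ["big data", "data lake", "big data platform", "data"]
partials = [map_partition(p) for p in partition(lines, n=2)]
totals = reduce(merge, partials, Counter())
```

Because `map_partition` never looks outside its own partition, the map step can run on as many machines as there are partitions; only the small partial counts need to travel over the network for the merge.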

Big Data development: How to make it work for your business case

1. Establish clear business KPIs and estimate ROI

First of all, it is critical to establish clear KPIs and calculate ROI. If you need to validate the feasibility and profitability of your Big Data analytics system, you can undertake a Business Strategy Discovery Phase and, based on rigorous calculations for different scenarios, either integrate third-party solutions or build your own Big Data analytics system. The Product Discovery phase will provide you with all the deliverables needed to efficiently kick off the implementation phase while mitigating risks and optimizing costs.
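The scenario comparison comes down to simple arithmetic: ROI = (benefit - cost) / cost. The sketch below compares the two options mentioned above; every figure in it is invented for illustration and is not taken from any real project.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical three-year figures in USD, for illustration only.
scenarios = {
    "integrate third-party solution": {"benefit": 900_000, "cost": 600_000},
    "build in-house system": {"benefit": 1_500_000, "cost": 1_200_000},
}
results = {name: roi(s["benefit"], s["cost"]) for name, s in scenarios.items()}
best = max(results, key=results.get)
```

With these made-up inputs the third-party option returns 50% against 25% for the in-house build, even though the in-house build has the larger absolute benefit, which is why comparing ratios and not only totals matters.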

2. Ensure effective Big Data engineering

The success of any Big Data analytics project is about:

- choosing the right data sources;

- building an orchestrated ecosystem of platforms that collect siloed data from hundreds of sources;

- cleaning, aggregating, and preprocessing the data to make it fit for a specific business case;

- in some cases, applying Data Science or Machine Learning models;

- visualizing the insights.

Before applying any algorithms or visualizing data, you need to have the data appropriately structured and cleaned up. Only then can you turn that data into insights. In fact, ETL (extracting, transforming, and loading) and further cleaning of the data account for around 80% of the time spent on any Big Data analytics project.
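To make the three ETL stages concrete, here is a minimal sketch using only the Python standard library. The CSV content, column names, and the in-memory SQLite target are assumptions chosen so that the example is self-contained; a real pipeline would read from source systems and write to a data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: inconsistent casing, stray spaces, a missing value.
RAW_CSV = """customer,country,revenue
 Acme ,us,1200.50
Globex,US,
initech,de,300
"""


def extract(text: str) -> list:
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list) -> list:
    """Transform: trim and normalize text, drop rows without revenue."""
    cleaned = []
    for row in rows:
        if not row["revenue"].strip():
            continue  # skip incomplete rows
        cleaned.append((
            row["customer"].strip().title(),
            row["country"].strip().upper(),
            float(row["revenue"]),
        ))
    return cleaned


def load(rows: list, conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into a SQL table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS revenue "
        "(customer TEXT, country TEXT, revenue REAL)"
    )
    conn.executemany("INSERT INTO revenue VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
by_country = dict(
    conn.execute("SELECT country, SUM(revenue) FROM revenue GROUP BY country")
)
```

Even in this toy case most of the code lives in `transform`, which mirrors the point above that cleaning takes the largest share of the effort.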

Success stories of Big Data development

#1. Big Data development for improved maintenance and flawless operation of the in-flight Internet connectivity

About our client

Gogo is a global provider of in-flight broadband Internet with over 20 years of experience and more than 1,000 employees. Today, Gogo has partnerships with more than 16 commercial airlines, and it has installed in-flight connectivity technology on more than 2,900 commercial aircraft and over 6,600 business aircraft.

Business challenge: Rethinking data governance

Gogo needed to improve the quality of the in-flight Internet. Its satellite antennas often malfunctioned, which led to paying penalties to the airlines. Moreover, after the equipment was removed and checked, many of the antenna failures were classified as no-fault-found (NFF) because no anomaly was detected, which caused unnecessary downtime and wasted costs. Therefore, Gogo initiated a complex data governance project to ensure the flawless operation of the equipment and high speed of the in-flight Internet.

N-iX approach: From on-premise to the cloud

First, we helped Gogo migrate their on-premise data solutions to the cloud in order to collect and process a considerable amount of data from more than 20 sources. Not only did the migration to the AWS cloud expand the data processing capacity needed for further data analytics solutions, but it also saved Gogo the costs of licenses and on-premise infrastructure. Next, we built a cloud-based unified data platform that collects and aggregates both structured data (e.g., systems uptime, latency) and unstructured data (e.g., the number of concurrent Wi-Fi sessions and video views during a flight). For this purpose, our engineers built an end-to-end delivery pipeline: from the moment the logs come from the equipment to the moment they are fully analyzed, processed, and stored in the data lake on AWS.

Further, in order to predict antenna equipment failure and reduce the no-fault-found (NFF) rate, we applied data science models, namely a Gaussian Mixture Model and regression analysis. We set up a process for retrieving data from seriously degraded antennas and correlated it with weather conditions and the antenna construction.
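To show how a Gaussian Mixture Model can be used to spot abnormal readings, here is a small one-dimensional sketch fitted with the EM algorithm in plain Python. It is not the model built for this project: the signal readings, the two-component choice, and the thresholding rule (flag anything less likely than the least likely healthy reading) are all assumptions made for illustration.

```python
import math
from typing import List, Tuple

Component = Tuple[float, float, float]  # (weight, mean, variance)


def normal_pdf(x: float, mean: float, var: float) -> float:
    """Density of a 1-D normal distribution at x."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_gmm(data: List[float], k: int = 2, iters: int = 50) -> List[Component]:
    """Fit a 1-D Gaussian mixture with k components using EM."""
    ordered = sorted(data)
    # Deterministic start: initial means spread across the sorted data.
    means = [ordered[(2 * j + 1) * len(ordered) // (2 * k)] for j in range(k)]
    overall = sum(data) / len(data)
    var0 = sum((x - overall) ** 2 for x in data) / len(data)
    comps = [(1.0 / k, m, var0) for m in means]
    for _ in range(iters):
        # E-step: responsibility of each component for each point.
        resp = []
        for x in data:
            p = [w * normal_pdf(x, m, v) for w, m, v in comps]
            total = sum(p) or 1e-300  # guard against underflow to zero
            resp.append([pi / total for pi in p])
        # M-step: re-estimate weight, mean and variance per component.
        updated = []
        for j in range(k):
            nj = max(sum(r[j] for r in resp), 1e-12)
            mean = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var = sum(r[j] * (x - mean) ** 2 for r, x in zip(resp, data)) / nj
            updated.append((nj / len(data), mean, max(var, 1e-6)))
        comps = updated
    return comps


def log_likelihood(x: float, comps: List[Component]) -> float:
    """Log of the mixture density at x."""
    density = sum(w * normal_pdf(x, m, v) for w, m, v in comps)
    return math.log(max(density, 1e-300))  # floor avoids log(0)


# Hypothetical signal readings from healthy antennas in two operating modes.
healthy = [49.0, 50.5, 51.0, 50.0, 49.5, 79.0, 80.5, 81.0, 80.0, 79.5]
model = fit_gmm(healthy)
threshold = min(log_likelihood(x, model) for x in healthy)


def is_anomaly(reading: float) -> bool:
    """Flag readings less likely than any healthy training reading."""
    return log_likelihood(reading, model) < threshold
```

In practice a library implementation such as scikit-learn's `GaussianMixture` would normally be used on multi-dimensional data; the hand-written EM loop is here only to keep the example dependency-free.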

Benefits of the Big Data development project:

- Saving costs and enhancing user experience with predictive maintenance

- Migration to the cloud allowed our partner to reduce costs on licenses (Cloudera/Microsoft) and on-premises servers and enabled them to control and optimize resources in the cloud based on their processing needs.

- The solution allows Gogo to significantly reduce operational expenses on penalties to airlines for poorly performing Wi-Fi services.

- The no-fault-found rate was reduced by 75%, thus saving costs on unnecessary removal of equipment for servicing.

- Predictive analytics makes it possible to predict antenna failures (>90%, 20-30 days in advance) and to service the in-flight equipment at the most suitable time, for example, when there are no flights scheduled for a plane.

- The reasons behind the poor performance of antennas have been identified (e.g., antennas often failed after the use of antifreeze fluid). That allowed Gogo to prevent some of the typical failure causes (e.g., by adding an additional protection layer to the antennas).

#2. Big Data development for a leading industrial supply company

About our client

Our client is a Fortune 500 industrial supply company. It offers over 1.6 million quality in-stock products in such categories as safety, material handling, and metalworking. Also, the company provides inventory management and technical support to more than 3 million customers in North America.

Business challenge: Effective data management strategy

Being a large-scale industrial supply company, our client needed to efficiently manage large amounts of data. Therefore, the company decided to extend its data warehouse solution which collects data from multiple departments.

The company was looking to reduce Big Data development costs and outsource their Big Data engineering to a reliable vendor. Also, our client wanted to migrate the solution to the cloud to make it more scalable and cost-efficient.

N-iX approach: From PoC to production

To migrate from an on-premise Hortonworks Hadoop cluster to AWS and allow processing additional data in AWS, the N-iX team built an AWS-based Big Data platform from scratch. Also, we have been involved in extending and supporting the existing Teradata solution. Teradata is used to collect data from other systems and then generate reports with BusinessObjects and Tableau. The data sources are MS SQL, Oracle, and SAP.

To choose the data warehouse design and the tech stack that fit our client’s business needs, our specialists created a proof of concept. We compared Amazon Redshift with Snowflake and chose Snowflake as it matched the client’s cloud-neutral approach: it can easily scale any amount of computing power up and down for any number of workloads and across any combination of clouds.

The whole development process is cloud-agnostic and is designed to ensure that the client can easily change the cloud provider in the future. For example, we use Terraform as it is compatible with the major cloud vendors: AWS, Azure, and Google Cloud.

What we achieved together: Unified cloud-based Big Data platform

The project is in the development phase. Currently, our specialists are building an environment that will be able to process large datasets. The use of Snowflake and Airflow allows us to automate the data extraction process. Also, Snowflake minimizes data duplication by checking whether the ingested files have already been processed.
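The de-duplication idea, skipping files that have already been ingested, can be sketched independently of any particular platform. The snippet below is not Snowflake's implementation; it only illustrates the concept by tracking a content hash per file in an in-memory ledger. The `IngestLedger` class and the file names are hypothetical.

```python
import hashlib
from typing import Dict, List


class IngestLedger:
    """Remembers which file contents have already been loaded."""

    def __init__(self) -> None:
        self._seen: Dict[str, str] = {}   # content hash -> first file name
        self.loaded: List[str] = []

    def ingest(self, name: str, content: bytes) -> bool:
        """Load the file unless identical content was loaded before.

        Returns True if the file was loaded, False if it was skipped.
        """
        digest = hashlib.sha256(content).hexdigest()
        if digest in self._seen:
            return False
        self._seen[digest] = name
        self.loaded.append(name)
        return True


ledger = IngestLedger()
outcomes = [
    ledger.ingest("orders_2021_01.csv", b"id,qty\n1,5\n"),
    ledger.ingest("orders_2021_02.csv", b"id,qty\n2,3\n"),
    ledger.ingest("orders_2021_01_retry.csv", b"id,qty\n1,5\n"),  # same content
]
```

Keying the ledger on a content hash makes re-runs of a scheduled load idempotent: a retried or renamed file with identical content is skipped instead of being loaded twice.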

After half a year of working on the data pipeline unification, we managed to integrate more than 100 different data sources into a unified data platform. This includes daily data loads, along with a backfill of historical data. We are working with terabytes of table data, and the size is growing.

Benefits: Saving costs and more efficient Big Data management

- Saving infrastructure costs with cloud migration.

- Improved efficiency of data management thanks to the unified data platform that stores all the data in one place.

- Predictive analytics capabilities of the platform. For example, the finance department will be able to predict the inventory-related expenses.

- Using cloud-neutral technology to avoid vendor lock-in and benefit from different cloud providers.

Why choose N-iX for your Big Data development project

- N-iX boasts an internal pool of 2,200+ experts and a team of 200+ data analytics specialists.

- Our specialists have solid expertise in the most relevant tech stack for implementing Big Data, Business Intelligence, Data Science, AI/Machine Learning solutions (including Computer Vision).

- N-iX partners with Fortune 500 companies to help them launch Big Data projects and migrate their data to the cloud.

- Our Big Data experts have experience working with open-source and commercial Big Data technologies (both on-premise and cloud-based) such as Apache Spark, Hadoop, Kafka, Flink, Snowflake, Airflow, etc.

- N-iX complies with international regulations and security norms, including ISO 27001:2013, PCI DSS, ISO 9001:2015, GDPR, and HIPAA, so your sensitive data will always be safe.
