According to the BCG AI Radar 2025 Survey, 66% of executives worldwide consider data privacy and security a top risk when implementing AI solutions in their organizations. What are the most common AI privacy concerns businesses face, and how can they overcome them? We gathered key insights and guidance from our security experts to help you navigate the complexities of AI while safeguarding privacy and building trust.

Key privacy issues with AI usage

Privacy issues with artificial intelligence often arise when AI systems handle sensitive or personal information, increasing the risk of unauthorized access, misuse, or exposure. These concerns include:

Lack of transparency and explainability

AI systems may rely on opaque data-sharing practices, leaving users unaware of when and how their personal information is collected and processed. This undermines trust between consumers and organizations and puts personal information at risk. Without clear consent and understanding, people can be exposed to targeted advertising, unwanted profiling, and even identity theft. It can also violate privacy rights and lead to non-compliance with regulations such as the GDPR (General Data Protection Regulation) or CCPA (California Consumer Privacy Act), especially when consent is ambiguous or improperly obtained.

Surveillance and facial recognition

AI-powered surveillance, such as facial recognition, raises concerns about mass tracking and misuse by operators or attackers. These systems can infringe on individual privacy by enabling intrusive monitoring without consent.

Predictive harm and inferred data

AI systems can infer sensitive information, such as health conditions, financial status, or political affiliations, from seemingly innocuous data. These inferences can harm individuals if misused, especially when they are inaccurate or made without the subject's knowledge.

Group privacy and autonomy risks

AI can target groups based on shared characteristics, infringing on collective privacy. For example, predictive policing or targeted advertising might exploit patterns in group behavior, leading to stigmatization or restrictions on group autonomy.

Bias and discrimination concerns

AI systems trained on biased datasets can perpetuate or amplify discrimination. This harms individuals and erodes public trust in AI technologies, particularly when sensitive attributes such as race, gender, or religion are involved.

Data leakage and exfiltration

AI systems often handle vast amounts of personal and proprietary information, making them attractive targets for attackers. Data leakage or unauthorized access can lead to significant privacy breaches, legal consequences, and financial losses.

Top 7 practical ways to protect privacy when using AI

Protecting personal information requires proactive measures. Below, our security engineers share practical tips for safeguarding privacy, securing your IT systems, and complying with data protection standards when using AI.

1. Set up strong data governance policies

Effective AI data governance requires clearly defined rules, responsibilities, and roles for data processing by artificial intelligence. Implement strong policies to define who has access to what data, how data should be handled, and under what circumstances it can be shared or processed. This helps ensure compliance with relevant privacy regulations, such as GDPR or CCPA, and fosters overall transparency across AI systems.
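Governance rules are easier to enforce consistently when applications can check them in code before processing data. The following Python sketch shows one way to encode which roles may use a data category and for which purposes. It denies anything that is not explicitly allowed. The roles, data categories, purposes, and the `DataPolicy` and `is_allowed` names are hypothetical illustrations and do not come from any specific governance tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataPolicy:
    """Hypothetical governance rule for one data category."""
    category: str
    allowed_roles: frozenset
    allowed_purposes: frozenset
    shareable_externally: bool = False


# Illustrative policy table; real entries would come from your governance framework.
POLICIES = {
    p.category: p
    for p in [
        DataPolicy("customer_contact", frozenset({"support_agent"}),
                   frozenset({"customer_support"})),
        DataPolicy("health_records", frozenset({"clinician"}),
                   frozenset({"treatment"})),
        DataPolicy("usage_metrics", frozenset({"data_scientist", "ml_engineer"}),
                   frozenset({"analytics", "model_training"})),
    ]
}


def is_allowed(role: str, category: str, purpose: str, external: bool = False) -> bool:
    """Deny by default: unknown categories, roles, or purposes are rejected."""
    policy = POLICIES.get(category)
    if policy is None:
        return False
    if external and not policy.shareable_externally:
        return False
    return role in policy.allowed_roles and purpose in policy.allowed_purposes
```

For example, `is_allowed("ml_engineer", "usage_metrics", "model_training")` returns `True`. The same role requesting `"health_records"` is refused. If the policy table is kept in version control, auditors also get a reviewable history of who was allowed to do what.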

2. Implement privacy by design

The privacy by design principle refers to integrating privacy and data security measures into the development of products, services, or systems from the outset. This approach requires anticipating and preventing privacy risks throughout the AI model's lifecycle. To complement privacy by design, our security engineers suggest the following practices:

- Conducting AI-specific Data Protection Impact Assessments (DPIAs) to identify risks before processing begins;

- Creating transparency statements that clearly explain how AI systems generate outputs;

- Addressing ethical considerations in system documentation.

3. Limit and minimize data usage

For more secure AI data management, it is crucial to follow the principle of data minimization by collecting only information necessary for specific functions. Assess the system's objectives to limit data scope, conduct regular audits to eliminate unnecessary information, and tie collection to well-documented purposes. Additionally, fostering privacy awareness among stakeholders helps address AI privacy concerns, ensuring that data limitation is prioritized at every stage of product development and deployment.
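Data minimization can be enforced at the point of ingestion by keeping only the fields that a documented purpose requires. The sketch below assumes a hypothetical `PURPOSE_FIELDS` mapping that is maintained alongside your governance policies. The purposes and field names are illustrative. The `unused_fields` helper supports the regular audits described above by listing stored fields that a given purpose does not need.

```python
# Hypothetical mapping of documented purposes to the minimum fields each one needs.
PURPOSE_FIELDS = {
    "churn_prediction": {"account_age_days", "plan", "monthly_usage"},
    "fraud_detection": {"transaction_amount", "merchant_category", "country"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields documented for `purpose`; refuse undocumented purposes."""
    if purpose not in PURPOSE_FIELDS:
        raise ValueError(f"No documented purpose: {purpose!r}")
    allowed = PURPOSE_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


def unused_fields(records: list, purpose: str) -> set:
    """Audit helper: fields present in stored records that `purpose` does not need."""
    if purpose not in PURPOSE_FIELDS:
        raise ValueError(f"No documented purpose: {purpose!r}")
    present = set().union(*(record.keys() for record in records))
    return present - PURPOSE_FIELDS[purpose]
```

For instance, suppose a record contains `name`, `email`, `account_age_days`, `plan`, and `monthly_usage`. Passing it to `minimize(record, "churn_prediction")` keeps only the last three fields.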

4. Prioritize anonymization and differential privacy

Use data anonymization to remove or alter personally identifiable information (PII) in datasets so that individuals cannot be directly identified, while the data keeps its utility for AI training. To strengthen anonymization, our security engineers suggest complementing it with differential privacy. This technique adds calibrated noise to data or query results. As a result, the output of an analysis or AI model changes very little whether or not any single individual's record is included, which limits what can be inferred about that person. Together, anonymization and differential privacy let organizations use large datasets for model training while significantly reducing AI privacy risks.

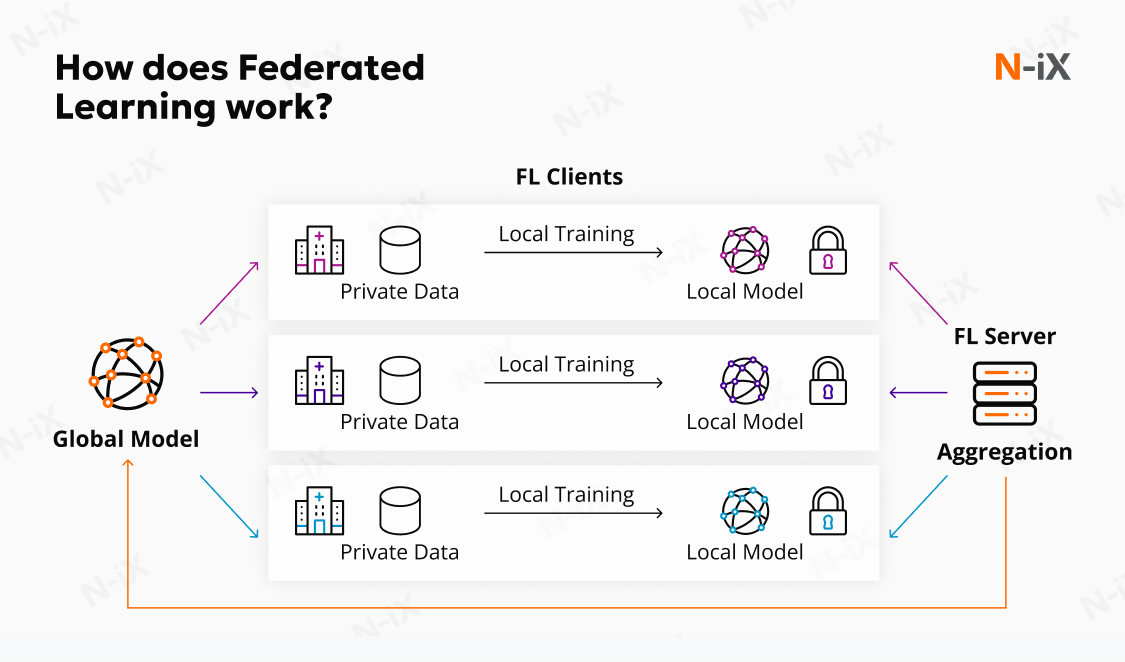
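A minimal Python sketch of both ideas follows, using only the standard library.

- `deidentify_record` drops direct identifiers, coarsens age into bands, and replaces the user ID with a keyed HMAC-SHA256 hash. Strictly speaking, keyed hashing is pseudonymization rather than anonymization. Anyone holding the key can re-link records, and the GDPR still treats pseudonymized data as personal data.
- `dp_count` applies the Laplace mechanism to a counting query. A count has a sensitivity of 1, so noise with scale 1/ε yields ε-differential privacy.

The record fields, the key handling, and the function names are assumptions for illustration.

```python
import hashlib
import hmac
import random

# Assumption: in practice, load this key from a secrets manager and store it apart from the data.
SECRET_KEY = b"example-key-do-not-use-in-production"


def pseudonymize_id(user_id: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 hash (pseudonymization:
    the key holder can still re-link records)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def generalize_age(age: int, band: int = 10) -> str:
    """Coarsen an exact age into a band, e.g. 37 -> '30-39'."""
    low = (age // band) * band
    return f"{low}-{low + band - 1}"


def deidentify_record(record: dict) -> dict:
    """Drop name and email, band the age, and pseudonymize the user ID."""
    return {
        "pid": pseudonymize_id(record["user_id"]),
        "age_band": generalize_age(record["age"]),
        "diagnosis_code": record["diagnosis_code"],
    }


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(records, predicate, epsilon: float, rng=None) -> float:
    """Epsilon-differentially private count using the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon is sufficient."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.SystemRandom()  # OS-backed randomness rather than a seeded PRNG
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1 / epsilon, rng)
```

This is a teaching sketch only. Naive floating-point Laplace sampling has known weaknesses, so production systems should rely on a vetted library such as OpenDP or Google's differential privacy library. They should also track the total privacy budget spent across queries.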

5. Implement federated learning

Federated learning addresses AI privacy issues by training models on decentralized data without transferring that data to a central server. Raw data stays on local devices or servers, and only model updates or aggregated insights are shared with the central system. This method minimizes data exposure, reduces the risk of centralized breaches, and enables privacy-preserving collaboration among multiple parties, which makes it well suited to processing sensitive data.
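The core loop of federated averaging (FedAvg), a widely used federated learning algorithm, can be sketched in a few lines. In this illustrative example, each client fits a one-variable linear model on its own data. The server only ever receives the resulting weights and sample counts, which it averages. The model, learning rate, and number of rounds are arbitrary assumptions chosen to keep the example small. Real deployments use ML frameworks and much larger models.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of full-batch gradient descent on one client's private data.

    Only the updated weights and the sample count leave the client, never `data`."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error for the model y = w * x + b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates):
    """Server step: average client weights, weighted by each client's sample count."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


def train(clients, rounds=200):
    """Each round: broadcast global weights, train locally, then aggregate the results."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates)
    return global_weights
```

Shared model updates can still leak information about the training data. For that reason, federated learning is often combined with secure aggregation or differential privacy.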

6. Strengthen access control and authentication

Access control and authentication ensure that only authorized users or systems can interact with sensitive data or AI infrastructure. Enforce strong password policies and multi-factor authentication (MFA) to verify identities, and use role-based access control (RBAC) to limit permissions according to job functions.

Authentication also extends to securing API endpoints and cloud environments where AI systems operate. By enforcing strict access protocols, you can prevent unauthorized entry, reduce the risk of insider threats, and protect the integrity of data used in AI processes, maintaining confidentiality and privacy.
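The sketch below illustrates RBAC combined with an MFA requirement for especially sensitive operations, the kind of check an API handler might run before serving a request. The roles, the permission strings, and the `Principal` and `authorize` names are hypothetical. In practice, identities and MFA status would come from your identity provider rather than being set directly in code.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission mapping that follows the principle of least privilege.
ROLE_PERMISSIONS = {
    "data_scientist": {"dataset:read_anonymized", "model:train"},
    "ml_engineer": {"model:train", "model:deploy"},
    "privacy_officer": {"dataset:read_raw", "audit:read"},
}

# Sensitive permissions that additionally require a completed MFA challenge.
MFA_REQUIRED = {"dataset:read_raw", "model:deploy"}


class AccessDenied(Exception):
    """Raised when a request must not proceed."""


@dataclass
class Principal:
    user_id: str
    roles: set = field(default_factory=set)
    mfa_verified: bool = False  # assumption: supplied by your identity provider


def authorize(principal: Principal, permission: str) -> None:
    """Fail closed: raise unless a role grants the permission and any MFA requirement is met."""
    granted = set().union(*(ROLE_PERMISSIONS.get(role, set()) for role in principal.roles))
    if permission not in granted:
        raise AccessDenied(f"{principal.user_id} lacks permission {permission!r}")
    if permission in MFA_REQUIRED and not principal.mfa_verified:
        raise AccessDenied(f"{permission!r} requires multi-factor authentication")
```

Because the check raises an exception by default, it fails closed. A request proceeds only when a role explicitly grants the permission and any required MFA step has been completed.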

7. Conduct regular security and compliance audits

One of the best practices for addressing AI privacy and security concerns is conducting regular security audits and compliance checks. Evaluate AI systems systematically to identify vulnerabilities, misconfigurations, or non-compliance with privacy regulations. Our security engineers also suggest running automated security assessments complemented by penetration testing, which simulates cyberattacks. Audits should also include reviewing access logs for unusual activity and assessing encryption strength.
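A review of access logs for unusual activity can start with simple rules before moving to more sophisticated anomaly detection. The sketch below flags two patterns: users who access an unusually large total number of records, and users who access data outside business hours. The log format (`user`, an ISO 8601 `timestamp`, `records`), the thresholds, and the business-hours window are assumptions. Adapt them to your own logging setup.

```python
from collections import Counter
from datetime import datetime


def flag_unusual_access(log_entries, max_records_per_user=1000, business_hours=(8, 19)):
    """Return a sorted list of (user, reason) pairs worth investigating.

    Each log entry is assumed to be a dict with 'user', 'timestamp' (ISO 8601),
    and 'records' (number of records accessed)."""
    totals = Counter()
    flagged = set()
    start, end = business_hours
    for entry in log_entries:
        totals[entry["user"]] += entry["records"]
        hour = datetime.fromisoformat(entry["timestamp"]).hour
        if not (start <= hour < end):
            flagged.add((entry["user"], "off-hours access"))
    # Bulk access is judged on each user's total across the whole log window.
    for user, count in totals.items():
        if count > max_records_per_user:
            flagged.add((user, f"bulk access: {count} records"))
    return sorted(flagged)
```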

Compliance checks ensure alignment with regulations like GDPR, HIPAA, or CCPA, which often mandate specific data protection standards. For instance, an audit might reveal that an AI system retains user data longer than necessary, prompting corrective action. Conduct these assessments frequently to address issues proactively, adapt to evolving threats, and demonstrate a commitment to privacy regulations.
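The retention example above can be turned into an automated check. This sketch compares each record's age against a retention period defined per data category. It reports anything held too long, as well as records in categories with no documented retention rule. The categories, periods, and record structure are hypothetical. Timestamps are assumed to be timezone-aware UTC `datetime` objects.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category, taken from a documented policy.
RETENTION = {
    "chat_transcripts": timedelta(days=30),
    "training_snapshots": timedelta(days=365),
}


def overdue_records(records, now=None):
    """Return IDs of records kept longer than their category's retention period.

    Records in a category without a documented retention rule are reported too,
    since there is no documented basis for keeping them."""
    if now is None:
        now = datetime.now(timezone.utc)
    overdue = []
    for record in records:
        limit = RETENTION.get(record["category"])
        if limit is None or now - record["created_at"] > limit:
            overdue.append(record["id"])
    return overdue
```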

Conclusion

AI privacy concerns will continue to evolve with technological advancements. However, effective tools and strategies can help you protect personal data and comply with security standards.

Digital privacy depends on understanding risks and implementing appropriate protections. Start with small changes: review your privacy settings, question unnecessary data collection, and partner with companies that demonstrate responsible AI practices.

Dealing with AI privacy issues can be challenging at the beginning of your artificial intelligence journey. Partnering with an experienced consultant can help you navigate this complex landscape. With extensive expertise and practical strategies, an AI consultant can help you balance technological advancement and security.

How N-iX helps mitigate AI privacy concerns

N-iX offers AI, data governance, and security services that help companies address AI data privacy issues. With experience in GDPR, CCPA, SOC, and other data protection compliance requirements, N-iX provides expert guidance on ethical AI development.

Our team of data and security consultants has extensive expertise in addressing biases, privacy vulnerabilities, and transparency issues in AI systems. We have established clear, actionable guidelines for responsible AI usage for over 60 companies working with data and artificial intelligence.

With over 22 years of experience, N-iX places data privacy, security, and compliance at the center of every security strategy. Start your privacy audit with us today, and let's protect your AI initiatives together.
